Radhesh started speaking,

Hello everyone! This is a new blog post about my project, Dog Breed Classification, which is based on a CNN (convolutional neural network).

Description of Dataset:

Here we have a dataset of just over 10,000 images (10,222 labelled training images) of various dog breeds. Each breed is a separate class, and there are 120 classes in total.

- I downloaded the Dataset from:

Platform description:

After downloading the dataset, I uploaded it to Google Drive. You may be wondering: "Hey Radhesh, why use Google Drive when you could simply store the dataset on your PC?"

The answer is that I wanted to work in Google Colab, which can read files directly from Google Drive.

Google Colab: Colaboratory, or “Colab” for short, is a product from Google Research. Colab allows anybody to write and execute arbitrary python code through the browser, and is especially well suited to machine learning, data analysis and education.

- The main benefit for this project is that it provides a free GPU for faster processing

Starting Of Project:

- Importing necessary libraries:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

In this project I used TensorFlow and Keras.

If you want to know more about TensorFlow and Keras, please visit here

- Importing Tensorflow and Keras:

import tensorflow as tf

#Importing tensorflow_hub

import tensorflow_hub as hub

print("version of tensorflow:",tf.__version__)

print("version of tensorflow_hub:",hub.__version__)

#checking for GPU

print("GPU","AVAILABLE :)"if tf.config.list_physical_devices("GPU") else "not Available :(")

Output:

- version of tensorflow: 2.4.1,

- version of tensorflow_hub: 0.12.0,

- GPU AVAILABLE :)

After importing the necessary libraries, I set up the path to the dataset so that I can load it into my Colab notebook:

path='drive/MyDrive/dog-vision/train/'

Read the dataset:

lable=pd.read_csv('/content/drive/MyDrive/dog-vision/labels.csv')

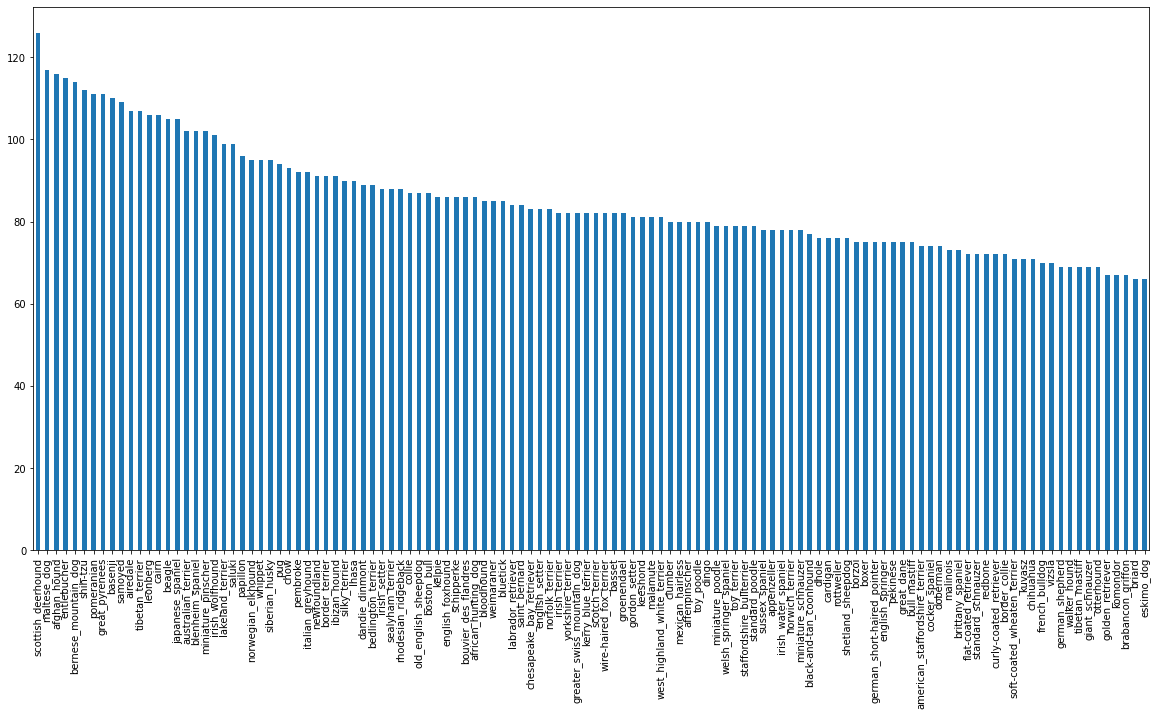

Let's check the dataset by visualization:

lable['breed'].value_counts().plot.bar(figsize=(20,10));

Output:
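As a library-free illustration of what `value_counts()` computes before plotting, here is a minimal sketch that counts how often each breed appears. The breed list is made-up toy data, not the real labels, and `collections.Counter` is only a stand-in for the pandas call.

```python
from collections import Counter

# Toy stand-in for lable['breed'] (these five entries are invented).
breeds = ["dingo", "borzoi", "dingo", "pekinese", "dingo"]

# Count the number of images per breed, like value_counts() does.
counts = Counter(breeds)

# Print a tiny text "bar chart", most frequent breed first.
for breed, n in counts.most_common():
    print(f"{breed:10s} {'#' * n}")
```

On the real dataset, a chart like this helps to spot breeds with very few images.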

Checking that the number of filenames matches the number of files in the train folder:-

filename=[path + fname + '.jpg' for fname in lable['id']]

import os

# compare the full list of filenames with everything in the train folder

if len(os.listdir(path))==len(filename):

    print("length of data in filename matched with actual data in train folder")

else:

    print('not matched')

Output: length of data in filename matched with actual data in train folder
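Comparing lengths only tells us the counts agree. A slightly stricter check is to confirm that every id in the labels file has its own image. The sketch below is one possible way to do that; `find_missing_files` is a hypothetical helper name, and the demo uses a temporary folder with made-up ids instead of the real train folder.

```python
import os
import tempfile

def find_missing_files(folder, ids, ext=".jpg"):
    """Return the ids whose image file is not present in folder."""
    present = set(os.listdir(folder))
    return [i for i in ids if i + ext not in present]

# Toy demonstration in a temporary folder (the ids are invented).
with tempfile.TemporaryDirectory() as tmp:
    for name in ("a1", "b2"):
        open(os.path.join(tmp, name + ".jpg"), "w").close()
    missing = find_missing_files(tmp, ["a1", "b2", "c3"])

print(missing)
```

With the real data you would pass `path` and `lable['id']`; an empty list means every label has an image.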

- Storing the labels of dataset:

lables=lable['breed']

lables

Output:

0 boston_bull 1 dingo 2 pekinese 3 bluetick 4 golden_retriever ...

10217 borzoi 10218 dandie_dinmont 10219 airedale 10220 miniature_pinscher 10221 chesapeake_bay_retriever Name: breed, Length: 10222, dtype: object

Now, converting the labels to a NumPy array:

lables=lable['breed'].to_numpy()

lables

Output:

array(['boston_bull', 'dingo', 'pekinese', ..., 'airedale', 'miniature_pinscher', 'chesapeake_bay_retriever'], dtype=object)

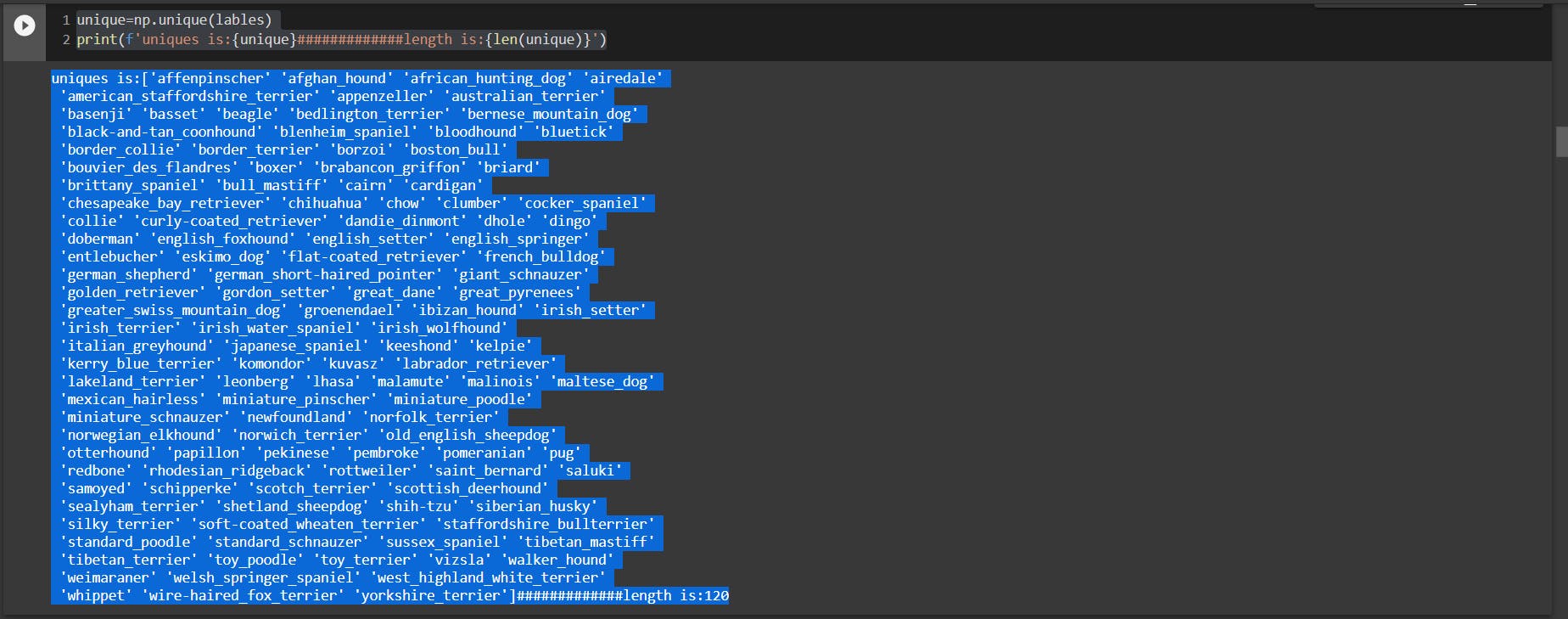

- Creating a set of unique labels:

unique=np.unique(lables)

print(f'uniques is:{unique}#############length is:{len(unique)}')

Output:

From the output above we can also verify that there are 120 unique classes.

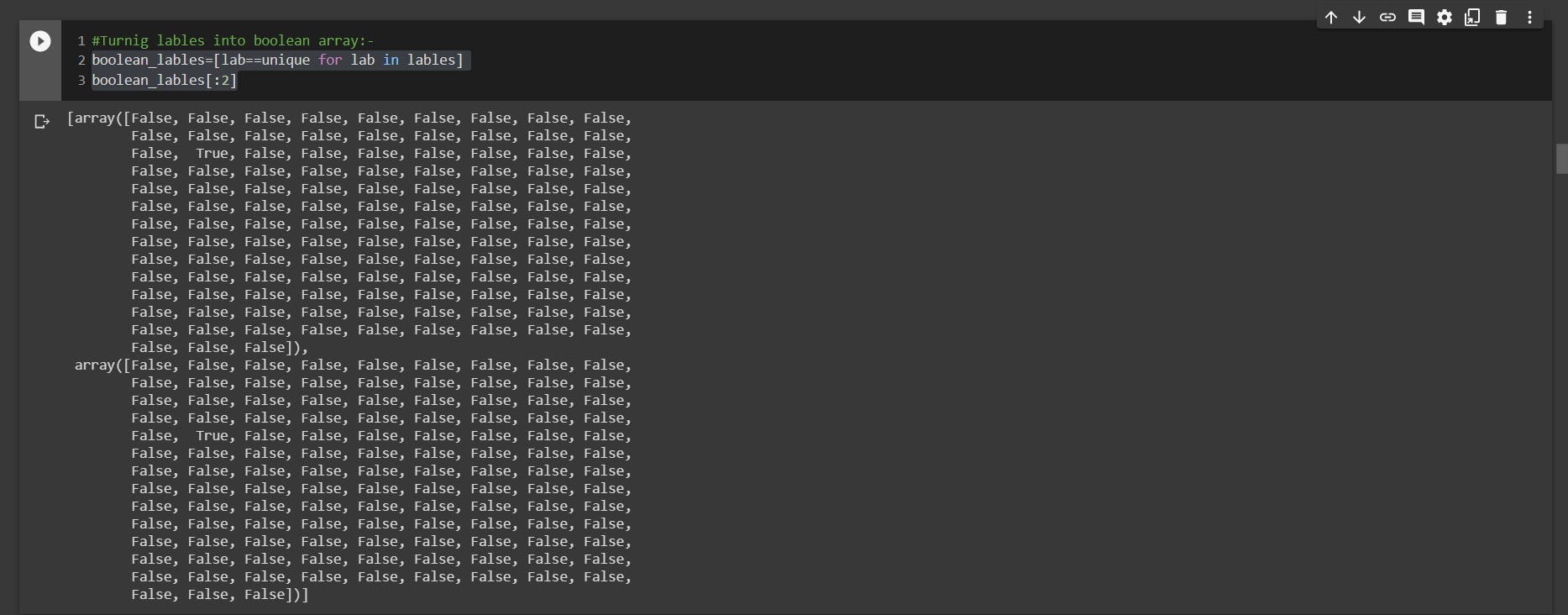

Turning labels into boolean array:

boolean_lables=[lab==unique for lab in lables]

boolean_lables[:2]

Output:
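To see what this comparison produces, here is a minimal sketch on three toy labels (the labels are invented for illustration). It uses NumPy broadcasting, which gives the same result as the list comprehension above, and shows how `argmax` turns a boolean row back into a breed name.

```python
import numpy as np

labels = np.array(["dingo", "borzoi", "dingo"])  # toy labels, not the real data
unique = np.unique(labels)                        # sorted unique breeds

# Broadcasting compares every label with every unique breed at once.
boolean_labels = labels[:, None] == unique
print(boolean_labels)

# Each row contains exactly one True; argmax recovers the breed index.
recovered = unique[boolean_labels.argmax(axis=1)]
print(recovered)
```

Each row is a one-hot encoding of the label, which is the format the model's loss function expects later on.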

Creating Validation Set of Data:-

Creating and setting up our x & y variables:-

x=filename

y=boolean_lables

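Now, splitting the data into training and validation sets. To experiment quickly, I start with a subset of 1,000 images:-

from sklearn.model_selection import train_test_split

# number of images to use for the experiment

NUM_IMAGES=1000

X_train,X_val,y_train,y_val=train_test_split(x[:NUM_IMAGES],

                                             y[:NUM_IMAGES],

                                             test_size=0.2,random_state=42)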

len(X_train),len(X_val),len(y_train),len(y_val)

Output:- (800, 200, 800, 200)
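To make the 800/200 split concrete, here is a minimal pure-Python sketch of the idea: shuffle the items, then cut off 20% for validation. `simple_split` is a hypothetical helper written for illustration; it is not how scikit-learn implements `train_test_split`, and it will not pick the same items.

```python
import random

def simple_split(items, test_size=0.2, seed=42):
    """Shuffle a copy of items and cut it into (train, validation) parts."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_val = int(round(len(items) * test_size))
    return items[n_val:], items[:n_val]

train, val = simple_split(range(1000))
print(len(train), len(val))  # 800 200
```

The key property is that no item ends up in both parts, so the validation score is measured on images the model has not trained on.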

After splitting, we have to convert the images into tensors so that they can be fed into our TensorFlow model. First, let's look at the shape of one image:

from matplotlib.pyplot import imread

image=plt.imread(filename[42])

image.shape

Output: (257, 350, 3)

Now, let's create a function to turn an image into a tensor of size (224,224):

IMG_SIZE=224

def preprocess_img(image_path):

    '''takes an image file path and turns the image into a tensor'''

    # read the image file from its path

    image=tf.io.read_file(image_path)

    # decode the JPEG image into a tensor with 3 colour channels

    image=tf.image.decode_jpeg(image,channels=3)

    # convert the colour channel values from 0-255 to 0-1

    image=tf.image.convert_image_dtype(image,tf.float32)

    # resize the image to (IMG_SIZE,IMG_SIZE)

    image=tf.image.resize(image,size=[IMG_SIZE,IMG_SIZE])

    return image
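The two numeric steps above (scaling pixels to 0-1 and resizing) can be sketched in plain NumPy to show what happens to the array. This is only an illustration under stated assumptions: `scale_and_resize` is a hypothetical helper, the image is random noise, and it uses nearest-neighbour sampling, which is simpler than the interpolation `tf.image.resize` applies by default.

```python
import numpy as np

def scale_and_resize(image_uint8, size=224):
    """Scale uint8 pixels to [0, 1] and resize with nearest-neighbour sampling."""
    image = image_uint8.astype(np.float32) / 255.0
    height, width = image.shape[:2]
    rows = np.arange(size) * height // size  # source row for each output row
    cols = np.arange(size) * width // size   # source column for each output column
    return image[rows][:, cols]

# Random stand-in for a (257, 350, 3) photo like the one inspected earlier.
fake_image = np.random.randint(0, 256, size=(257, 350, 3), dtype=np.uint8)
resized = scale_and_resize(fake_image)
print(resized.shape, resized.dtype)
```

Whatever the original size, every image leaves the function with the same shape, which is what allows images to be stacked into batches.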

Now, let's create a function which returns a tuple (image,lable):-

def get_image_lable(img_path,lables):

    image=preprocess_img(img_path)

    return image,lables

- Creating batches of the dataset:

BATCH_SIZE=32

def create_batch(X,y=None,batch_size=BATCH_SIZE,valid_data=False,test_data=False):

    if test_data:

        # test data has no labels, so only the file paths are used

        print('creating test_data batches....')

        data=tf.data.Dataset.from_tensor_slices(tf.constant(X))

        data_batch=data.map(preprocess_img).batch(batch_size)

        return data_batch

    elif valid_data:

        # validation data does not need to be shuffled

        print('creating valid_data batches...')

        data=tf.data.Dataset.from_tensor_slices((tf.constant(X),

                                                 tf.constant(y)))

        data_batch=data.map(get_image_lable).batch(batch_size)

        return data_batch

    else:

        print('creating training_data batches....')

        data=tf.data.Dataset.from_tensor_slices((tf.constant(X),

                                                 tf.constant(y)))

        # shuffle the file paths and labels before loading the images

        data=data.shuffle(buffer_size=len(X))

        data_batch=data.map(get_image_lable).batch(batch_size)

        return data_batch

train_data=create_batch(X_train,y_train)

val_data=create_batch(X_val,y_val,valid_data=True)
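To get a feel for what batching does to the 800 training and 200 validation samples, here is a minimal pure-Python sketch of shuffling and slicing into groups of 32. `make_batches` is a hypothetical helper for illustration only; it is not how `tf.data` works internally.

```python
import random

def make_batches(items, batch_size=32, shuffle=False, seed=0):
    """Optionally shuffle items, then cut them into consecutive batches."""
    items = list(items)
    if shuffle:
        random.Random(seed).shuffle(items)
    return [items[i:i + batch_size] for i in range(0, len(items), batch_size)]

train_batches = make_batches(range(800), shuffle=True)
val_batches = make_batches(range(200))
print(len(train_batches), len(val_batches), len(val_batches[-1]))  # 25 7 8
```

Note that 200 is not a multiple of 32, so the last validation batch holds only 8 samples. The batch size is therefore not the same for every batch.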

Checking our Batch:

train_data.element_spec,val_data.element_spec

Output:-

((TensorSpec(shape=(None, 224, 224, 3), dtype=tf.float32, name=None), TensorSpec(shape=(None, 120), dtype=tf.bool, name=None)), (TensorSpec(shape=(None, 224, 224, 3), dtype=tf.float32, name=None), TensorSpec(shape=(None, 120), dtype=tf.bool, name=None)))

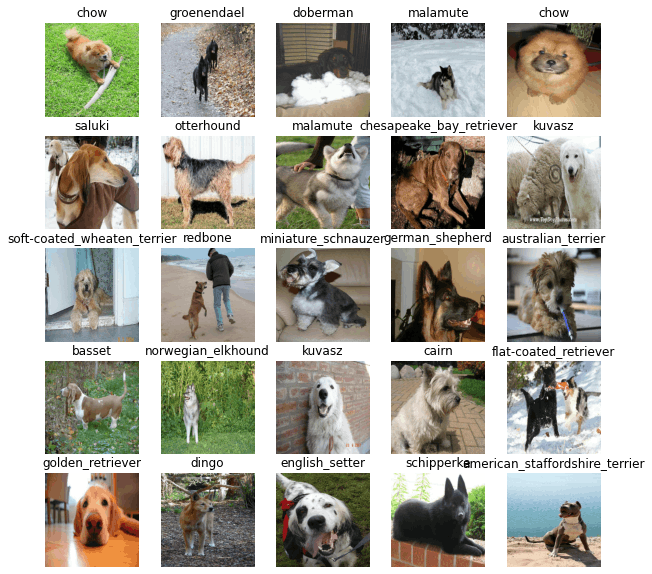

- Visualising the data batch:-

def show_img(images,lable):

    '''

    Displays a plot of 25 images from a data batch

    '''

    plt.figure(figsize=(10,10))

    for i in range(25):

        ax = plt.subplot(5,5,i+1)

        plt.imshow(images[i])

        plt.title(unique[lable[i].argmax()])

        plt.axis('off')

train_images,train_lables=next(train_data.as_numpy_iterator())

show_img(train_images,train_lables)

Output:-

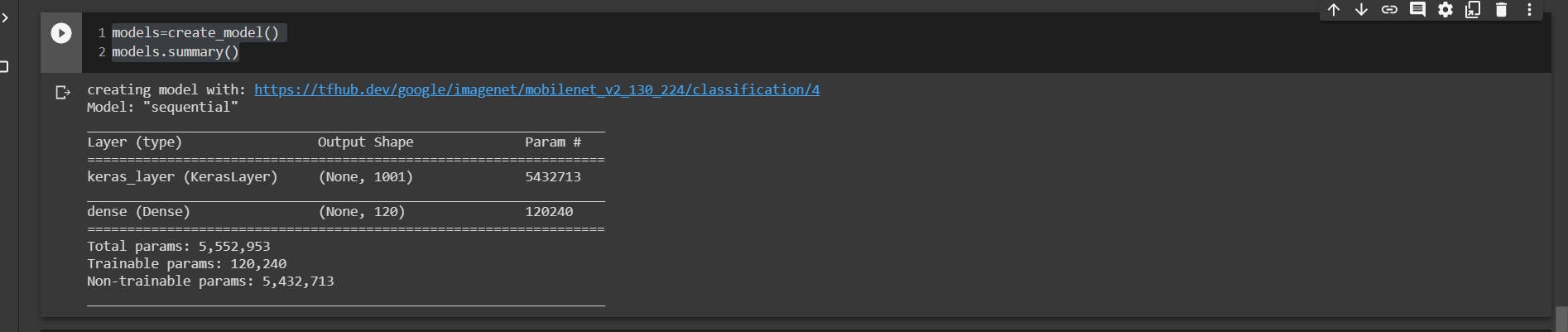

Building Model:-

To build the model I used MobileNetV2 from TensorFlow Hub, a model pretrained on the ImageNet dataset. Starting from a pretrained model (transfer learning) boosts the performance and accuracy of our own model.

To use it, copy and paste the model URL:-

#input shape:-

INPUT_SIZE=[None,IMG_SIZE,IMG_SIZE,3]#batch, height, width, colour channels

#output shape:-

OUTPUT_SIZE=len(unique)

#setup Model url from tensorflow_hub:-

MODEL_URL='https://tfhub.dev/google/imagenet/mobilenet_v2_130_224/classification/4'

def create_model(input_shape=INPUT_SIZE,output_shape=OUTPUT_SIZE,model_url=MODEL_URL):

    print('creating model with:',model_url)

    model=tf.keras.Sequential([

        hub.KerasLayer(model_url),#1st layer: the pretrained model (input layer)

        tf.keras.layers.Dense(units=output_shape,activation='softmax')#output layer: one unit per breed

    ])

    model.compile(

        loss=tf.keras.losses.CategoricalCrossentropy(),

        optimizer=tf.keras.optimizers.Adam(),

        metrics=['accuracy']

    )

    model.build(input_shape)

    return model

models=create_model()

models.summary()

Output:-

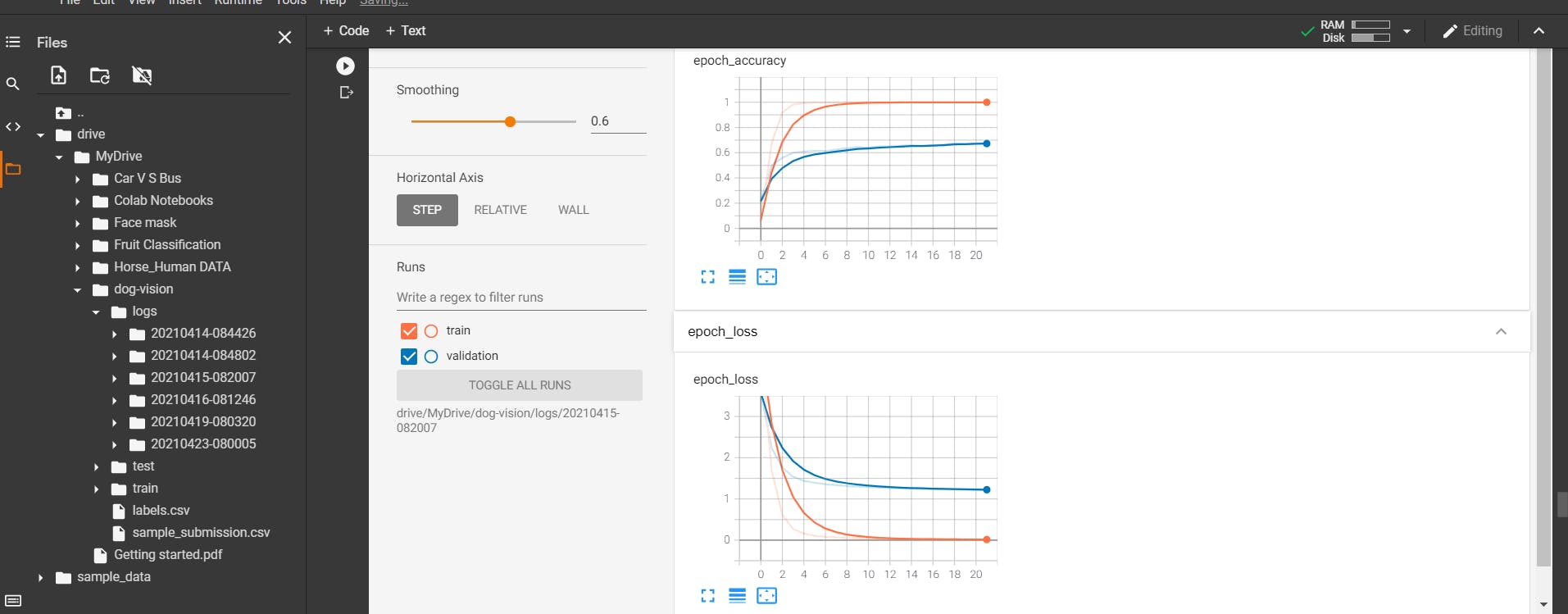
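The output layer uses softmax and the model is trained with categorical cross-entropy. To show what those two pieces compute, here is a minimal NumPy sketch on a toy example with 3 classes instead of 120. The logits are made-up numbers, and the functions are simplified stand-ins rather than the Keras implementations.

```python
import numpy as np

def softmax(logits):
    """Turn raw scores into probabilities that sum to 1."""
    shifted = logits - logits.max(axis=-1, keepdims=True)  # for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=-1, keepdims=True)

def categorical_crossentropy(one_hot, probs, eps=1e-7):
    """Mean negative log-probability assigned to the true class."""
    probs = np.clip(probs, eps, 1.0)
    return -(one_hot * np.log(probs)).sum(axis=-1).mean()

logits = np.array([[2.0, 1.0, 0.1]])       # invented scores for one image
target = np.array([[True, False, False]])  # boolean one-hot label, as in our dataset

probs = softmax(logits)
loss = categorical_crossentropy(target, probs)
print(probs.round(3), round(float(loss), 3))
```

The loss is small when the model puts a high probability on the correct breed and grows as that probability shrinks.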

Now, creating the log directory for TensorBoard:-

Loading TensorBoard:-

%load_ext tensorboard

import datetime

import os

def create_tb_callback():

    # every run gets its own timestamped folder inside logs

    log_dir=os.path.join('/content/drive/MyDrive/dog-vision/logs',

                         datetime.datetime.now().strftime("%Y%m%d-%H%M%S"))

    return tf.keras.callbacks.TensorBoard(log_dir)
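To show what the timestamped folder name looks like, here is a small sketch using a fixed date. `make_log_dir` and the `logs` base folder are hypothetical names used only for this example.

```python
import datetime
import os

def make_log_dir(base_dir, now=None):
    """Build a per-run log folder name from the current (or a given) time."""
    now = now or datetime.datetime.now()
    return os.path.join(base_dir, now.strftime("%Y%m%d-%H%M%S"))

# A fixed, invented timestamp so that the result is predictable.
example = make_log_dir("logs", datetime.datetime(2021, 3, 14, 9, 26, 53))
print(example)
```

Because each run writes to a different folder, TensorBoard can show several training runs side by side.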

Creating the early stopping callback, which stops training when the validation accuracy has not improved for 3 epochs in a row:-

early_stopping=tf.keras.callbacks.EarlyStopping(monitor='val_accuracy',

                                                patience=3)

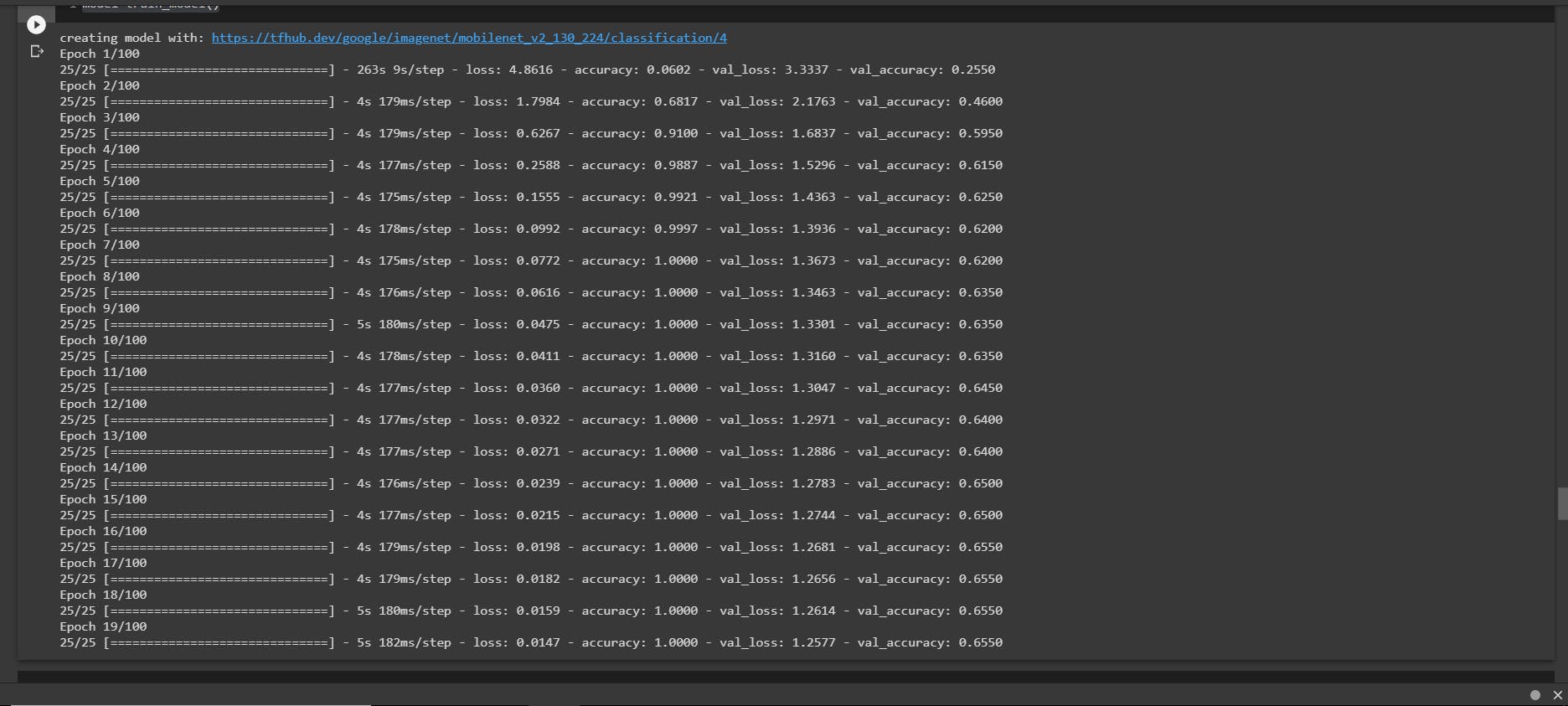
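The patience rule can be sketched in a few lines of plain Python. This is a simplified illustration of the idea, not the Keras source code, and the accuracy values are invented for the example.

```python
def epochs_run(val_accuracies, patience=3):
    """Return the epoch at which training would stop under a patience rule."""
    best = float("-inf")
    wait = 0
    for epoch, acc in enumerate(val_accuracies, start=1):
        if acc > best:
            best, wait = acc, 0   # improvement: remember it and reset the counter
        else:
            wait += 1             # no improvement this epoch
            if wait >= patience:
                return epoch
    return len(val_accuracies)

history = [0.50, 0.62, 0.61, 0.60, 0.62, 0.70]  # made-up validation accuracies
print(epochs_run(history))  # 5
```

In this toy history, training stops at epoch 5 and never reaches the better score at epoch 6. That is the trade-off when choosing a small patience value.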

Now, fitting the model to our data:-

#creating a function to train the model:-

def train_model():

    model=create_model()

    tensorboard=create_tb_callback()

    # NUM_EPOCHS (the maximum number of epochs) must be defined before this call

    model.fit(x=train_data,

              epochs=NUM_EPOCHS,

              validation_data=val_data,

              validation_freq=1,

              callbacks=[tensorboard,early_stopping])

    return model

model=train_model()

Output:-

So our model is now trained with good accuracy :)

Loading Tensorboard result:-

So, this is the end of my project "Dog Breed Classification". I hope you liked it. Next time I will come back with new projects. Till then, thank you :)